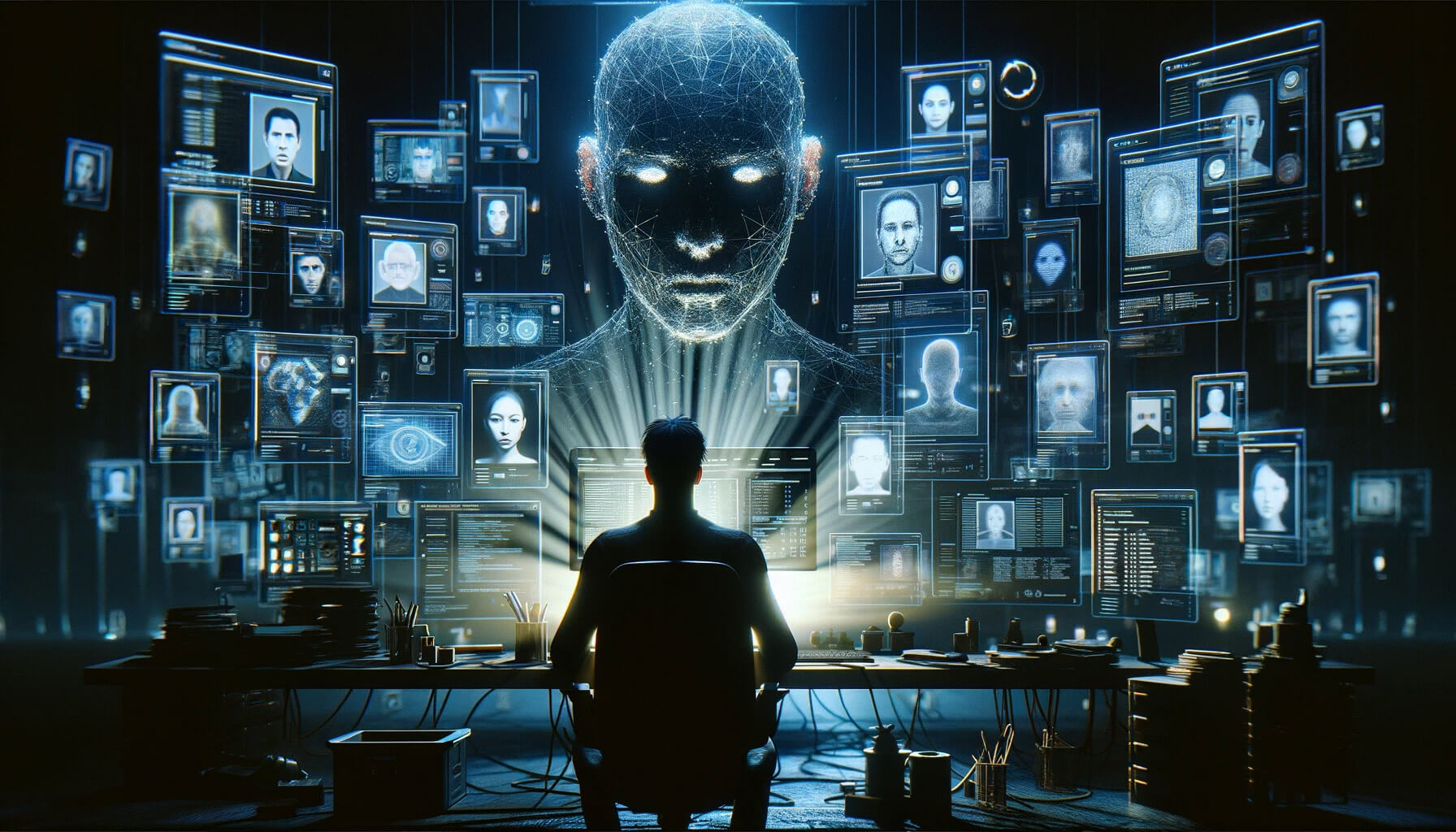

Coin Center’s Director of Research, Peter Van Valkenburgh, has issued a stark warning regarding the escalating threat posed by artificial intelligence (AI) in fabricating identities.

Van Valkenburgh sounded the alarm after an investigative report revealed details of an underground website called OnlyFake, which claims to employ “neural networks” to create convincingly realistic fake IDs for a mere $15.

Identity fraud via AI

OnlyFake’s method represents a seismic shift in creating fraudulent documents, drastically lowering the barrier to committing identity fraud. Traditional means of producing fake IDs require considerable skill and time, but with OnlyFake, almost anyone can generate a high-quality phony ID in minutes.

This ease of access could streamline a range of illicit activities, from bank fraud to money laundering, posing unprecedented challenges to traditional and digital institutions alike.

404 Media confirmed the effectiveness of these AI-generated IDs by using one to pass the identity verification process at crypto exchange OKX. OnlyFake’s ability to produce IDs that can fool verification systems highlights a significant vulnerability in the methods that financial institutions, including crypto exchanges, use to prevent fraud.

The service, described by an individual known as John Wick, leverages advanced AI techniques to generate a wide range of identity documents, from driver’s licenses to passports, for numerous countries. These documents are visually convincing and are produced with an efficiency and at a scale previously unseen in fake ID production.

The inclusion of realistic backgrounds in the ID images adds another layer of authenticity, making the fakes harder to detect.

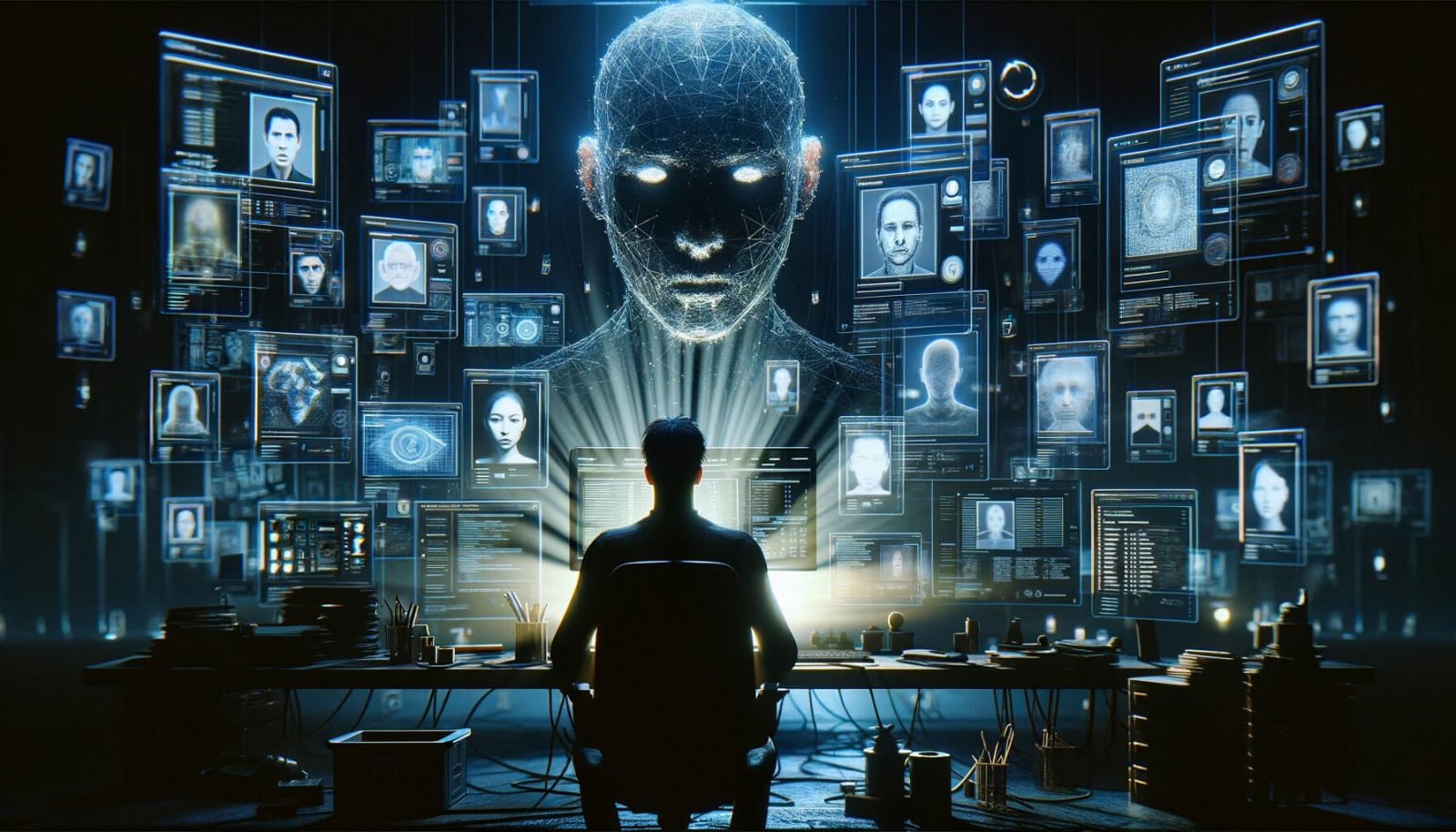

Cybersecurity arms race

This development raises serious concerns about the effectiveness of current identity verification methods, which often rely on scanned or photographed documents. The ability of AI to create such realistic forgeries calls into question the reliability of these processes and highlights the urgent need for more sophisticated measures to combat identity fraud.

Van Valkenburgh believes that cryptocurrency technology might help solve this burgeoning problem. Blockchain and other decentralized technologies provide mechanisms for secure and verifiable transactions that do not depend on traditional ID verification methods, potentially offering a way to sidestep the vulnerabilities exposed by AI-generated fake IDs.

The implications of this technology extend beyond the realm of financial transactions and into the broader landscape of online security. As AI continues to evolve, so will the methods used by individuals with malicious intent.

The emergence of services like OnlyFake is a stark reminder of the ongoing arms race in cybersecurity, highlighting the need for continuous innovation in combating fraud and ensuring the integrity of online identity verification systems.

The rapid advancement of AI in creating fake identities not only poses a direct challenge to cybersecurity measures but also underscores the broader societal implications of AI technology. As institutions grapple with these challenges, the dialogue around AI’s role in society and its regulation becomes increasingly pertinent. The case of OnlyFake serves as a critical example of the dual-use nature of AI technologies, capable of both significant benefits and considerable risks.
